DPDK - Assigning CPUs for Poll Mode Driver (PMD)

OVS-DPDK is a critical requirement in the NFV world with OpenStack. TripleO has integrated the deployment of OVS-DPDK since the Newton release; until then it was a manual post-deployment step. A number of parameters have to be provided for a deployment with OVS-DPDK. One such parameter is NeutronDpdkCoreList. This blog post details the factors to consider when deciding the value for this parameter.

CPU List for PMD

The NeutronDpdkCoreList parameter configures the list of CPUs to be used by the Poll Mode Drivers. The following criteria should be considered to deliver optimal performance with OVS-DPDK:

- CPUs should belong to the NUMA node of the DPDK interface

- CPU siblings (in case of Hyper-threading) should be added together

- CPU 0 should always be left for host processes

- CPUs assigned to PMD should be isolated so that host processes do not use them

- CPUs assigned to PMD should be excluded from nova scheduling using NovaVcpuPinset
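The first three criteria can be checked mechanically before deploying. The following is a minimal sketch, not part of TripleO: `check_pmd_cores` is a hypothetical helper, and the topology dictionaries describe an assumed 16-CPU layout (CPUs 0-7 on NUMA node 0, CPUs 8-15 on node 1, siblings numbered as adjacent pairs). Real values should be taken from the host itself.

```python
def check_pmd_cores(pmd_cores, nic_numa_node, cpu_to_node, siblings):
    """Return a list of problems with a candidate PMD core list.

    An empty list means the candidate satisfies the NUMA, sibling and
    CPU 0 criteria. Isolation and NovaVcpuPinset are not checked here.
    """
    problems = []
    chosen = set(pmd_cores)
    if 0 in chosen:
        problems.append("CPU 0 should be left for host processes")
    for cpu in sorted(chosen):
        node = cpu_to_node[cpu]
        if node != nic_numa_node:
            problems.append(
                f"CPU {cpu} is on NUMA node {node}, NIC is on node {nic_numa_node}"
            )
        missing = set(siblings[cpu]) - chosen
        if missing:
            problems.append(f"CPU {cpu} is missing sibling(s) {sorted(missing)}")
    return problems


# Assumed layout (hypothetical): 8 CPUs per NUMA node, siblings are (0,1), (2,3), ...
cpu_to_node = {cpu: cpu // 8 for cpu in range(16)}
siblings = {cpu: [cpu - cpu % 2, cpu - cpu % 2 + 1] for cpu in range(16)}

if __name__ == "__main__":
    for problem in check_pmd_cores([0, 2], 0, cpu_to_node, siblings):
        print(problem)
```

An empty result only says the list is consistent with the topology that was passed in, so the dictionaries must reflect the actual machine.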

Fetching the Information

- `lscpu` command will give the list of CPUs associated with each NUMA node

- `cat /sys/devices/system/cpu/<cpu>/topology/thread_siblings_list` command will give the siblings of a CPU

- `cat /sys/class/net/<interface>/device/numa_node` command will give the NUMA node associated with an interface
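The same sysfs files can be read from a script. The sketch below is illustrative only: the function names and the `sysfs_root` parameter are assumptions introduced here, while the file paths are the ones listed above. The sibling file uses the kernel's CPU list format, which may contain both commas and ranges (for example `2,3` or `2-3`), so a small parser is included.

```python
from pathlib import Path


def parse_cpu_list(text):
    """Parse a kernel CPU list such as '0-3,8-11' into a list of ints."""
    cpus = []
    for part in text.strip().split(","):
        if not part:
            continue
        if "-" in part:
            first, last = part.split("-")
            cpus.extend(range(int(first), int(last) + 1))
        else:
            cpus.append(int(part))
    return cpus


def thread_siblings(cpu, sysfs_root="/sys"):
    """Return the sibling CPUs (including the CPU itself) of a CPU number."""
    path = (Path(sysfs_root) / "devices/system/cpu" / f"cpu{cpu}"
            / "topology/thread_siblings_list")
    return parse_cpu_list(path.read_text())


def interface_numa_node(interface, sysfs_root="/sys"):
    """Return the NUMA node of a network interface (-1 if none is reported)."""
    path = Path(sysfs_root) / "class/net" / interface / "device/numa_node"
    return int(path.read_text().strip())
```

On a Linux host, `thread_siblings(2)` and `interface_numa_node("<interface>")` return the values that the `cat` commands would print, already converted to integers.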

Sample Configuration

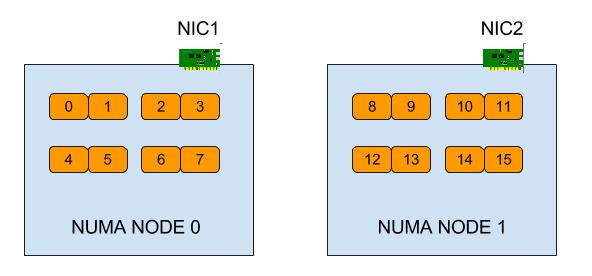

For better explanation, let us consider a baremetal machine with the specification below:

- A machine with 2 NUMA nodes

- Each NUMA node has 4 cores and 1 NIC

- Hyper-threading value of 2, so each core runs 2 threads (siblings)

- Each NUMA node therefore contains 8 CPUs

To configure NIC1 as DPDK, the configuration should be as follows:

NeutronDpdkCoreList: "'2,3'"

NeutronDpdkSocketMemory: "'1024'"

To configure NIC2 as DPDK, the configuration should be as follows:

NeutronDpdkCoreList: "'8,9'"

NeutronDpdkSocketMemory: "'0,1024'"
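Since the PMD CPUs must also be excluded from nova scheduling, the CPUs left over for NovaVcpuPinset can be derived from the PMD list. This is a rough sketch under stated assumptions: the helper names are hypothetical, the machine is assumed to number its CPUs 0-15, and CPUs 0 and 1 are assumed to be reserved for the host (the post only requires CPU 0 to be left free).

```python
def format_cpu_list(cpus):
    """Format CPU numbers as a compact list, e.g. [4, 5, 6, 10] -> '4-6,10'."""
    cpus = sorted(set(cpus))
    parts = []
    i = 0
    while i < len(cpus):
        j = i
        # Extend j while the next CPU number is consecutive.
        while j + 1 < len(cpus) and cpus[j + 1] == cpus[j] + 1:
            j += 1
        parts.append(str(cpus[i]) if i == j else f"{cpus[i]}-{cpus[j]}")
        i = j + 1
    return ",".join(parts)


def remaining_for_nova(all_cpus, pmd_cores, host_cpus):
    """Return the CPUs that are neither PMD cores nor reserved for the host."""
    return sorted(set(all_cpus) - set(pmd_cores) - set(host_cpus))


if __name__ == "__main__":
    all_cpus = range(16)   # assumption: CPUs are numbered 0-15
    host_cpus = [0, 1]     # assumption: CPU 0 and its sibling stay with the host
    pmd_cores = [2, 3]     # NeutronDpdkCoreList for NIC1 in the sample above
    print(format_cpu_list(remaining_for_nova(all_cpus, pmd_cores, host_cpus)))
    # prints "4-15"
```

The resulting string is a candidate value for NovaVcpuPinset and should be checked against the actual CPU numbering of the host.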